This page lists past and present projects that I deem worth documenting. Some projects are formal research publications, whereas others began as musings and morphed into something worthwhile. Many project listings lead to a larger page with more information. As always, this page is under construction; I'd like to add sorting by project type.

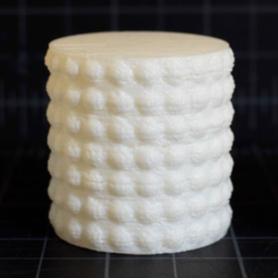

3D printing renders a digital form into physical, extruded plastic. But the physical form also has haptic characteristics that contribute to the feel of an object. HapticPrint is a series of tools that use manufacturing artifacts in the 3D printing process to control the texture, compliance, and weight of an object. Our internal tool readily converts a 2D image into a 3D texture. The 3D tools allow the user to control the compliance and patterns in various directions.
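As a rough illustration of turning a 2D image into a 3D texture, here is a minimal sketch that maps grayscale pixel values to surface heights. This is a generic heightmap conversion, not necessarily how HapticPrint works; the function name `image_to_heightmap` and the `max_height_mm` parameter are assumptions made for this example.

```python
def image_to_heightmap(gray, max_height_mm=1.0):
    """Map 8-bit grayscale pixels (0-255) to heights in millimeters.

    `gray` is a list of rows, each a list of integer pixel values.
    Black (0) maps to 0 mm and white (255) maps to `max_height_mm`.
    This is an illustrative assumption, not HapticPrint's actual method.
    """
    return [[(px / 255.0) * max_height_mm for px in row] for row in gray]


# A tiny 2x2 "image": the brighter the pixel, the taller the bump.
heights = image_to_heightmap([[0, 255], [51, 102]], max_height_mm=2.0)
```

Each height could then displace a vertex of a flat mesh before slicing, though the real tool may take a different route.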

Fluid User Interfaces, Fl.UIs, are touch interfaces formed with passive, unpowered hardware and an overhead computer vision system. The easy-to-build touch surfaces move colored liquid in response to a user's touch, and a camera easily detects that movement. Our paper describes methods of constructing the hardware, calibrating the vision system, and the various interactive elements possible with Fl.UIs.

Cesar Torres and I presented this work as a note at TEI 2015.
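To give a sense of why colored liquid is easy for a camera to pick up, here is a minimal sketch of a color-threshold check over a small image region. It is not the vision pipeline from the paper; the names `color_fraction` and `is_touched`, the tolerance, and the assumption that a touch pushes liquid into the watched region are all hypothetical.

```python
def color_fraction(region, target, tol=40):
    """Fraction of pixels in `region` within `tol` of `target` on every channel.

    `region` is a list of rows of (r, g, b) tuples; `target` is an (r, g, b) tuple.
    """
    pixels = [px for row in region for px in row]
    if not pixels:
        return 0.0
    hits = sum(
        1 for px in pixels
        if all(abs(c - t) <= tol for c, t in zip(px, target))
    )
    return hits / len(pixels)


def is_touched(region, target, tol=40, min_fraction=0.5):
    """Assume a touch fills the watched region with liquid of color `target`."""
    return color_fraction(region, target, tol) >= min_fraction


BLUE, WHITE = (0, 0, 255), (255, 255, 255)
idle = [[WHITE, WHITE], [WHITE, BLUE]]    # little liquid in view
pressed = [[BLUE, BLUE], [BLUE, WHITE]]   # liquid displaced into view
```

A real setup would also need the calibration step the paper describes, since lighting and camera placement shift the observed colors.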

When building physical skills or following instructional content, users are often left to interpret skills on their own. FabSense attempts to recognize workshop activity and classify both quantitative actions and the qualities of those actions. We use a single ring-worn Inertial Measurement Unit (IMU) to capture data and a machine learning approach to recognize activity. We performed a simple woodworking experiment with 5 tools and 15 users and achieved a user-independent classification accuracy of 82%.

This work is published as a technical report through UC Berkeley EECS.
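"User-independent" accuracy, as reported for FabSense above, usually means the classifier is tested on people it never saw during training. A common way to measure that is a leave-one-user-out split, sketched below. The report may have used a different protocol; the function name and the sample layout (dictionaries with `user`, `tool`, and `features` keys) are assumptions for this example.

```python
def leave_one_user_out(samples):
    """Yield (held_out_user, train, test) folds, one fold per user.

    Each sample is a dict with at least a "user" key. The held-out user's
    samples never appear in the training set for that fold.
    """
    users = sorted({s["user"] for s in samples})
    for held_out in users:
        train = [s for s in samples if s["user"] != held_out]
        test = [s for s in samples if s["user"] == held_out]
        yield held_out, train, test


# Hypothetical IMU feature vectors labeled by tool and user.
samples = [
    {"user": "u1", "tool": "saw", "features": [0.1, 0.9]},
    {"user": "u1", "tool": "file", "features": [0.4, 0.2]},
    {"user": "u2", "tool": "saw", "features": [0.2, 0.8]},
    {"user": "u3", "tool": "file", "features": [0.5, 0.1]},
]
folds = list(leave_one_user_out(samples))
```

Averaging a classifier's accuracy over the folds gives one user-independent figure.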